Move Your Bugs to the Left

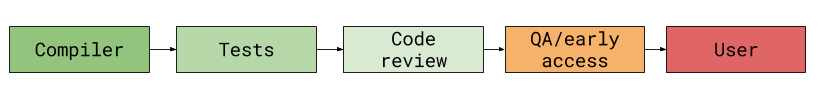

We all want to ship bug-free software. No-one wants to be the person that introduced a show-stopping bug the week before release. To help with this, I’ve come up with a simple way to visualise the points in the development process that you can find bugs.

The further to the left of that diagram a bug is found, the happier everyone will be. The worst-case scenario is for a user to discover a bug, shown on the far right.

“To the left, to the left” — Beyonce

What follows is advice to help you catch bugs early. I’ll start at the far left and work my way to the right.

# Static analysis

“Static analysis” tools inspect code without executing it in order to spot problems and inefficiencies at compile time. Let’s look at an example in Java.

class Test {

public static void main(String ...args) {

int[] a = {1, 2, 3};

int[] b = {1, 2, 3};

System.out.println(a.equals(b) ? "woot" : "noot");

}

}In Java, the String class has conditioned us to think that the .equals()

method compares two objects to make sure their values are the same. That’s not

true with arrays. With arrays, .equals() compares references, so the above

code snippet unintuitively prints “noot” because a and b are two distinct

objects.

ErrorProne is a Java static analysis tool from Google. It spots this error and many others, at compile time, and fails the compilation.

If your language has static analysis tools, use them and listen to their advice.

Almost every static analysis tool is going to produce false positives, and will have a mechanism to silence particular warnings in particular parts of your code. Consider the warning very carefully before silencing it. Setting a precedent of silencing the static analyser can lead to preventable bugs making it to your users.

# Compiler checks

The previous example showcased a tool that’s separate to the language’s compiler, but a lot of languages have useful checks built-in.

The Go compiler performs a bunch of checks by default that aim to eliminate common bugs. For example, if you don’t use a variable after declaring it, the compiler will produce an error and your compilation will fail. This works well when combined with Go’s error handling:

package main

import (

"fmt"

"strconv"

)

func main() {

val, err := strconv.Atoi("hello")

fmt.Println(val)

}This example doesn’t compile, because we’ve defined err but not used it. To

remedy this, we either have to do something with the error, or declare that we

don’t want care about it by renaming it _, which is a special symbol that’s

exempt from Go’s use checks.

val, _ := strconv.Atoi("hello")This protects us against forgetting to handle the error, but falls short when we have multiple error-producing function calls in the same scope:

package main

import (

"fmt"

"log"

"strconv"

)

func main() {

_, err := strconv.Atoi("1")

if err != nil {

log.Fatal(err)

}

val, err := strconv.Atoi("uh oh!")

fmt.Println(val)

}Sadly the compiler doesn’t save us here, and we end up printing out an invalid value.

Another renowned example is the -Wall -Werror combination of flags that C

compilers accept. This enables all compiler warnings and treats all warnings as

compilation errors.

Less well known, though, is the -Wextra flag. Despite its name, -Wall

doesn’t enable all of the warnings the compiler can show you.

#include <stddef.h>

#include <stdint.h>

int main(void) {

size_t n = SIZE_MAX;

int P[n];

for (int i = 0; i < n; i++) {

P[i] = 0;

}

return P[0];

}Even when compiled with -Wall -Werror, the above code passes through both GCC

and Clang with no complaints. But it’s incorrect. Can you see why?

Compiling with -Wall -Wextra -Werror sheds light on the situation:

/tmp/test.c:7:27: error: comparison of integers of different signs: 'int' and

'size_t' (aka 'unsigned long') [-Werror,-Wsign-compare]

for (int i = 0; i < n; i++) {

~ ^ ~

1 error generated.This mixed-sign comparison is undefined behaviour and causes anything from segfaults to infinite loops. It’s also completely avoidable with the right compiler flags.

Turn on all of the warnings your compiler can give you, and listen to them. Similar to static analysis, you’ll be able to silence certain warnings if you don’t think they apply. Do this sparingly, and with good reason.

# Type systems

A common problem encountered in dynamic languages is passing the wrong data type to a function.

def abs(n):

if n >= 0:

return n

return -nIt’s clear that this will explode if you pass it a string.

Even worse, though, is code that doesn’t explode when given the wrong data. Instead, it will silently compute an invalid result and pass it on to explode somewhere else in your code. When this happens, tracking down the root cause of the problem can take a very long time.

def sum(things):

if things == None:

return None

if len(things) == 0:

return 0

total = things[0]

for thing in things[1:]:

total += thing

return total

sum([1, 2, 3]) # 6Looks reasonable. It even has checks at the top to do something sensible with some common problematic values. So what’s the problem?

sum("hello")This returns the string "hello". Why? Because len works on strings,

iterating over a string like that in Python yields each successive character,

and the + operator on strings does concatenation. This is clearly not what the

function was intended to do. This problem is avoidable if we specify the type

we’re expecting ahead of time.

Prefer using languages with type systems for writing code that needs to be correct. If your language does not have a type system, defensively check that the data you’ve been passed is correct at runtime, and throw an error if it isn’t.

This isn’t to say that type systems remove all possibility of passing incorrect data to a function. You may still need to perform validity checks on things that are passed in, e.g., to check for null values.

# Unit testing

The next best place to catch bugs in your code is in unit tests. Unit testing is the practice of writing code that calls code you’ve written with various arguments and in various conditions and makes assertions about the result. For example, let’s say we wrote the following function:

public int abs(int i) {

return i < 0 ? -i : i;

}We could write the following unit tests to assert that it’s doing the right thing:

@Test

public void positive() {

assertEquals(abs(10), 10);

}

@Test

public void negative() {

assertEquals(abs(-10), 10);

}These two tests cover our basic use case, and even give us 100% “code coverage," which means all of the branches in our code have been taken. Does that mean our code is correct?

Sadly not. There’s a problem. It’s subtle, but take a minute and see if you can spot it.

One of the things we need to make sure we do in our unit tests is give our code

input that’s likely to cause problems, or input that we know will trigger an

error. With integers, there are a number of values known to cause problems: 0,

-1, Integer.MAX_VALUE and Integer.MIN_VALUE.

Our code doesn’t handle Integer.MIN_VALUE well. abs(Integer.MIN_VALUE)

returns -2147483648, which is a clear violation of how abs should work.

Figuring out why this happens is left as an exercise to the reader1.

The twos complement of b1000 is b1000, so the smallest negative number always negates to itself. This is because any integer range will always have a higher magnitude smallest number than largest, e.g. the range -128 to 127 cannot contain 128.

Make sure your unit tests exercise your code with a wide variety of data. Don’t be tricked into thinking 100% coverage means you’ve written all of the tests you need to write.

# Mutation testing

Complementary to unit testing, mutation testing is the practice of automatically modifying parts of your source code to see if doing so breaks any of your tests.

Reusing our abs function defined earlier:

public int abs(int i) {

return i < 0 ? -i : i;

}A typical mutation would be to either flip the comparison operator from < to

>, or replace the condition altogether:

public int abs(int i) {

return false ? -i : i;

}If our tests fail, we say that this mutation as been “killed.” If they pass, the mutation “lived” and needs to be killed.

Mutation testing is a good way to make sure that your unit tests are catching problems in your code.

pitest is a popular mutation testing library for JVM languages, and if you search for “<your language> mutation test” you’ll likely find something relevant to you. I will note, though, that mutation testing is quite a new idea and hasn’t caught on in all languages just yet. You may struggle to find a ready-made solution.

# Integration testing

Unit testing is an essential tool for preventing bugs, but it’s not enough on its own. You also need to test your code in the same way that the user is going to be using it.

Enter integration testing.

Let’s say we’ve written a command-line program that takes a file and replaces every vowel with the letter “o”. The program would be invoked something like this:

$ oify input.txt output.txtMost languages have a way to invoke commands like this, which makes testing our program simple. The test would craft an input file, write it to disk, run the command, and then assert that the output file only contains the vowel “o”.

Perhaps your program can also read from stdin and/or write to stdout in the

absence of the respective arguments. These are all things you would want to

test, along with what happens when invalid combinations of arguments or an

empty/non-existent file is passed in. Testing that your error handling is

correct is as important as testing that things work as expected.

Any interaction that you expect your user to have with your code should have a corresponding integration test.

Sometimes that can be quite tricky. If you’re creating a website and want to test that the right things happen when a user submits a form on that website, you wouldn’t need to poll many web developers to find one that will tell you that this is a nightmare. This is especially true if you’re making heavy use of Javascript. Browsers are large, complex programs and trying to emulate their behaviour will yield inconsistent results. The more moving parts you’re trying to test, the more difficulty you will have.

# Code review

After writing unit tests, integration tests and making sure your compiler and static analysis tools are happy, your next line of defence is the code review. In its simplest form, a code review involves another person reading over your code and checking that it makes sense. Some companies have different types of review, for example, a review from someone with in-depth knowledge of the existing codebase plus a review from someone with in-depth knowledge of the language or technologies you’re using.

Either way, it’s impossible to deny the value that a second set of eyes can provide for your code. I found it astonishing how many bugs and inefficiencies people were able to find in my code when I first started working at a company that practised regular code review. It’s very humbling and serves as an excellent way to learn from others in your team.

Make sure all code checked in to your code base goes through review from at least one other team member.

Some people find these reviews tedious. It’s not uncommon to run into the same problem being made again and again. When this happens, it can be a sign that an API is difficult to use or not well documented. A useful approach that the Go community has taken to this problem is to compile a list of code review comments that reviewers can refer back to when they see people making common mistakes.

# Style guides

A common component of code reviews is a “style guide.” This is often in the form of a list of dos and don’ts for a given language or technology, with explanations for each recommendation.

A good example of this is Google’s Python style guide. Let’s take a look at one of the points:

List Comprehensions: Okay to use for simple cases.

Pros:

Simple list comprehensions can be clearer and simpler than other list creation techniques. Generator expressions can be very efficient, since they avoid the creation of a list entirely.

Cons:

Complicated list comprehensions or generator expressions can be hard to read.

It goes on to show some examples of good and bad list comprehensions.

Good:

squares = [x * x for x in range(10)]Bad:

result = [(x, y) for x in range(10) for y in range(5) if x * y > 10]This shows that there’s a fine line to tread. You may be thinking that it’s too strict, and the “bad” example is perfectly fine. If using a nested list comprehension makes your code more readable, you can argue that with your reviewer. Style guides are guides, and all this one is saying is that you should prefer simple list comprehensions over complex ones. Condensing as much as you possibly can into one line is rarely a good idea. Remember, code is there to be read.

Another benefit of having a style guide is that, if you’re strict about following it, your code will be consistent. This reduces the cognitive overhead of understanding your code, reducing the chance of introducing bugs.

Pick a sensible style guide for the language you’re using an enforce it during code review. If you don’t agree with parts of the style guide you’ve found, you don’t have to follow them. It’s less about following it to the letter, and more about consistency.

# QA and early access

Some companies have a quality assurance (QA) department. This will be a team of people whose job is to test a release for correctness. How they do this differs a lot from company to company, from the old-school “run through a list of common user operations manually and tick some boxes” to automating that process entirely.

If you can’t afford a dedicated QA department, or you want an extra layer of reassurance, you could release “early access” builds. Users would be given the opportunity to opt-in, and the benefit to them would be that they can use new features before anyone else. The downside is that there’s no promise the software is stable.

This is a great middle ground. Users don’t expect everything to work, and you get to release to a motivated subset of your users that will be very vocal about problems in your software. Win-win.

Release early-access builds of your software to a subset of opted-in users. Listen to and action their feedback before releasing to everyone.

# User feedback

Let’s say you follow all of the above advice and pour lots of your energy into weeding out bugs in your code, but one slips through the net and causes your users pain. They will tell you! Provided you have an easy-to-find bug tracker or user feedback mechanism, users will let you know what happened and what they think should have happened.

Listen to them, and act on their advice.

A well-maintained, active bug tracker will be an invaluable resource not only of what your users want but how they’ve worked around problems. This is useful for anyone else with the same problem and acts as a form of secondary documentation when the primary documentation fails them.

Make it easy for users to give you feedback and ask you questions. Listen to them when they do.

If a user reports a bug that none of the previous steps caught, fix the bug and introduce checks further to the left to stop the same bug from reappearing.

# Postmortem

You fucked up big. Maybe you had a bug that leaked users’ personal details, or your service was down for an extended period of time. Whatever it was, users are angry and want to know what happened.

A “postmortem” is a document that says, in detail: what went wrong, how it came to be, how it was resolved at the time, and how you’re going to make sure it doesn’t happen again. The document doesn’t need to be released to the public, but doing so will help to restore any user trust you lost after the incident. Users value transparency, demonstrated wonderfully in the comment section of the now-famous GitLab database outage postmortem.

Over time, you might accrue bunch of changes to your code that do unobvious things. Maybe you check that a certain byte doesn’t appear in a blob, or that a string doesn’t start with a specific combination of characters. There’s a temptation, as knowledge of the incidents that necessitated these changes fade into myth, to remove these checks.

Writing postmortems helps your colleagues understand why your code is the way it is and stops you from making the same mistakes twice. Publishing postmortems helps restore user trust lost in large incidents.

Writing postmortems can be tricky, but following up on them is even more so. You need to come up with a list of action items to prevent future recurrence of the same problem and treat completing them as a top priority.

# Conclusion

There’s no guaranteed method of writing bug-free code, but following all of the above steps should go some way to reducing the number of bugs you ship to users.

From now on, when you’re writing code, ask yourself: “How far to the left could a bug be caught in this piece of code? Can I rewrite it in such a way that it’s caught further to the left?”